Multi-Layer Perceptron Using Multiple Epochs

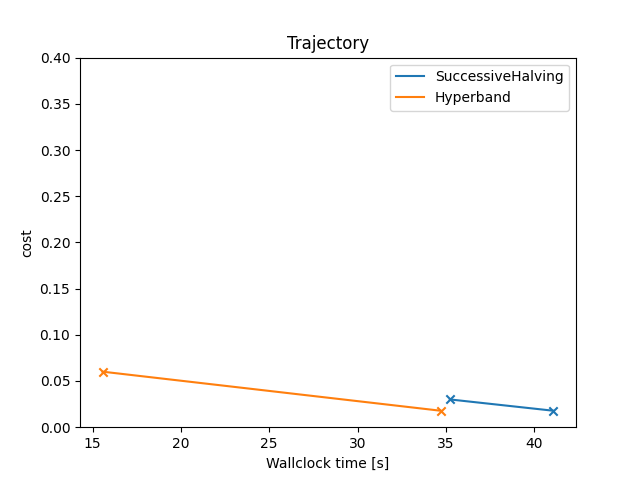

Example for optimizing a Multi-Layer Perceptron (MLP) using multiple budgets.

Since we want to take advantage of multi-fidelity, the MultiFidelityFacade is a good choice. By default, MultiFidelityFacade internally runs with Hyperband as the intensifier, which is a combination of an aggressive racing mechanism and Successive Halving. Crucially, the target function must accept a budget argument, which specifies how much fidelity SMAC wants to allocate to this configuration. In this example, we use both SuccessiveHalving and Hyperband to compare the results.

An MLP is a neural network trained over multiple epochs, and therefore we choose epochs as the fidelity type. This means that budget specifies the number of epochs SMAC wants to allocate. The digits dataset is chosen to optimize the average accuracy on 5-fold cross validation.
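The bracket layout that appears in the log output further down can be reproduced with a few lines of arithmetic. The following is a minimal sketch inferred from the logged values (eta 3, min budget 1, max budget 25); it is not SMAC's actual implementation, and the function name `bracket_schedule` is hypothetical.

```python
import math


def bracket_schedule(min_budget: float, max_budget: float, eta: int):
    """Return a list of (n_configs, budgets) pairs, one per bracket.

    Assumption: this mirrors the schedule printed in the log output,
    it is not taken from SMAC's source code.
    """
    # Number of times the budget can be multiplied by eta between min and max.
    max_iter = int(math.floor(math.log(max_budget / min_budget) / math.log(eta)))

    brackets = []
    for bracket in range(max_iter + 1):
        n_stages = max_iter + 1 - bracket
        # Each stage keeps 1/eta of the configurations and gets eta times the budget.
        n_configs = [eta ** (n_stages - 1 - i) for i in range(n_stages)]
        budgets = [max_budget * eta ** -(n_stages - 1 - i) for i in range(n_stages)]
        brackets.append((n_configs, budgets))
    return brackets


for index, (n_configs, budgets) in enumerate(bracket_schedule(1, 25, 3)):
    print(f"Bracket {index}: configs={n_configs}, budgets={budgets}")
```

In the logs, the Successive Halving run lists only bracket 0, while the Hyperband run lists all three brackets.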

Note

This example uses the MultiFidelityFacade, which is the closest implementation to BOHB.

[INFO][abstract_facade.py:184] Workers are reduced to 8.

[INFO][proxy.py:71] To route to workers diagnostics web server please install jupyter-server-proxy: python -m pip install jupyter-server-proxy

[INFO][scheduler.py:1615] State start

[INFO][scheduler.py:3861] Scheduler at: tcp://127.0.0.1:44661

[INFO][scheduler.py:3863] dashboard at: 127.0.0.1:8787

[INFO][nanny.py:365] Start Nanny at: 'tcp://127.0.0.1:36691'

[INFO][nanny.py:365] Start Nanny at: 'tcp://127.0.0.1:41147'

[INFO][nanny.py:365] Start Nanny at: 'tcp://127.0.0.1:39251'

[INFO][nanny.py:365] Start Nanny at: 'tcp://127.0.0.1:41897'

[INFO][nanny.py:365] Start Nanny at: 'tcp://127.0.0.1:34017'

[INFO][nanny.py:365] Start Nanny at: 'tcp://127.0.0.1:43977'

[INFO][nanny.py:365] Start Nanny at: 'tcp://127.0.0.1:33837'

[INFO][nanny.py:365] Start Nanny at: 'tcp://127.0.0.1:35615'

[INFO][scheduler.py:4213] Register worker <WorkerState 'tcp://127.0.0.1:42593', name: 3, status: init, memory: 0, processing: 0>

[INFO][scheduler.py:5466] Starting worker compute stream, tcp://127.0.0.1:42593

[INFO][core.py:867] Starting established connection to tcp://127.0.0.1:60236

[INFO][scheduler.py:4213] Register worker <WorkerState 'tcp://127.0.0.1:37935', name: 2, status: init, memory: 0, processing: 0>

[INFO][scheduler.py:5466] Starting worker compute stream, tcp://127.0.0.1:37935

[INFO][core.py:867] Starting established connection to tcp://127.0.0.1:60252

[INFO][scheduler.py:4213] Register worker <WorkerState 'tcp://127.0.0.1:35789', name: 0, status: init, memory: 0, processing: 0>

[INFO][scheduler.py:5466] Starting worker compute stream, tcp://127.0.0.1:35789

[INFO][core.py:867] Starting established connection to tcp://127.0.0.1:60250

[INFO][scheduler.py:4213] Register worker <WorkerState 'tcp://127.0.0.1:33711', name: 6, status: init, memory: 0, processing: 0>

[INFO][scheduler.py:5466] Starting worker compute stream, tcp://127.0.0.1:33711

[INFO][core.py:867] Starting established connection to tcp://127.0.0.1:60270

[INFO][scheduler.py:4213] Register worker <WorkerState 'tcp://127.0.0.1:34193', name: 7, status: init, memory: 0, processing: 0>

[INFO][scheduler.py:5466] Starting worker compute stream, tcp://127.0.0.1:34193

[INFO][core.py:867] Starting established connection to tcp://127.0.0.1:60266

[INFO][scheduler.py:4213] Register worker <WorkerState 'tcp://127.0.0.1:38785', name: 1, status: init, memory: 0, processing: 0>

[INFO][scheduler.py:5466] Starting worker compute stream, tcp://127.0.0.1:38785

[INFO][core.py:867] Starting established connection to tcp://127.0.0.1:60220

[INFO][scheduler.py:4213] Register worker <WorkerState 'tcp://127.0.0.1:36917', name: 5, status: init, memory: 0, processing: 0>

[INFO][scheduler.py:5466] Starting worker compute stream, tcp://127.0.0.1:36917

[INFO][core.py:867] Starting established connection to tcp://127.0.0.1:60280

[INFO][scheduler.py:4213] Register worker <WorkerState 'tcp://127.0.0.1:45475', name: 4, status: init, memory: 0, processing: 0>

[INFO][scheduler.py:5466] Starting worker compute stream, tcp://127.0.0.1:45475

[INFO][core.py:867] Starting established connection to tcp://127.0.0.1:60276

[INFO][scheduler.py:5226] Receive client connection: Client-b9781186-b904-11ed-8762-9b5ddbdbb7c8

[INFO][core.py:867] Starting established connection to tcp://127.0.0.1:60294

[INFO][abstract_initial_design.py:134] Using 5 initial design configurations and 0 additional configurations.

[INFO][successive_halving.py:163] Successive Halving uses budget type BUDGETS with eta 3, min budget 1, and max budget 25.

[INFO][successive_halving.py:307] Number of configs in stage:

[INFO][successive_halving.py:309] --- Bracket 0: [9, 3, 1]

[INFO][successive_halving.py:311] Budgets in stage:

[INFO][successive_halving.py:313] --- Bracket 0: [2.7777777777777777, 8.333333333333332, 25.0]

[INFO][smbo.py:298] Finished 0 trials.

[INFO][smbo.py:298] Finished 0 trials.

[INFO][smbo.py:298] Finished 0 trials.

[INFO][smbo.py:298] Finished 0 trials.

[INFO][smbo.py:298] Finished 0 trials.

[INFO][smbo.py:298] Finished 0 trials.

[INFO][smbo.py:298] Finished 0 trials.

[INFO][smbo.py:298] Finished 0 trials.

[INFO][smbo.py:298] Finished 50 trials.

[INFO][abstract_intensifier.py:493] Added config 8ea495 as new incumbent because there are no incumbents yet.

[INFO][abstract_intensifier.py:565] Added config 704d44 and rejected config 8ea495 as incumbent because it is not better than the incumbents on 1 instances:

[INFO][configspace.py:175] --- activation: 'tanh' -> 'logistic'

[INFO][configspace.py:175] --- batch_size: 214 -> 31

[INFO][configspace.py:175] --- learning_rate_init: 0.005599223654063347 -> 0.0038549874043245355

[INFO][configspace.py:175] --- n_layer: 4 -> 1

[INFO][configspace.py:175] --- n_neurons: 66 -> 87

[INFO][smbo.py:306] Configuration budget is exhausted:

[INFO][smbo.py:307] --- Remaining wallclock time: -0.1143178939819336

[INFO][smbo.py:308] --- Remaining cpu time: inf

[INFO][smbo.py:309] --- Remaining trials: 414

Default cost (SuccessiveHalving): 0.36672856700711853

Incumbent cost (SuccessiveHalving): 0.0178087279480037

[INFO][abstract_initial_design.py:69] Using `n_configs` and ignoring `n_configs_per_hyperparameter`.

[INFO][abstract_facade.py:184] Workers are reduced to 8.

/opt/hostedtoolcache/Python/3.10.10/x64/lib/python3.10/site-packages/distributed/node.py:182: UserWarning: Port 8787 is already in use.

Perhaps you already have a cluster running?

Hosting the HTTP server on port 39373 instead

warnings.warn(

[INFO][scheduler.py:1615] State start

[INFO][scheduler.py:3861] Scheduler at: tcp://127.0.0.1:34621

[INFO][scheduler.py:3863] dashboard at: 127.0.0.1:39373

[INFO][nanny.py:365] Start Nanny at: 'tcp://127.0.0.1:41925'

[INFO][nanny.py:365] Start Nanny at: 'tcp://127.0.0.1:34509'

[INFO][nanny.py:365] Start Nanny at: 'tcp://127.0.0.1:46725'

[INFO][nanny.py:365] Start Nanny at: 'tcp://127.0.0.1:42227'

[INFO][nanny.py:365] Start Nanny at: 'tcp://127.0.0.1:42783'

[INFO][nanny.py:365] Start Nanny at: 'tcp://127.0.0.1:33497'

[INFO][nanny.py:365] Start Nanny at: 'tcp://127.0.0.1:45157'

[INFO][nanny.py:365] Start Nanny at: 'tcp://127.0.0.1:45585'

[INFO][scheduler.py:4213] Register worker <WorkerState 'tcp://127.0.0.1:34553', name: 1, status: init, memory: 0, processing: 0>

[INFO][scheduler.py:5466] Starting worker compute stream, tcp://127.0.0.1:34553

[INFO][core.py:867] Starting established connection to tcp://127.0.0.1:50164

[INFO][scheduler.py:4213] Register worker <WorkerState 'tcp://127.0.0.1:36905', name: 3, status: init, memory: 0, processing: 0>

[INFO][scheduler.py:5466] Starting worker compute stream, tcp://127.0.0.1:36905

[INFO][core.py:867] Starting established connection to tcp://127.0.0.1:50174

[INFO][scheduler.py:4213] Register worker <WorkerState 'tcp://127.0.0.1:36471', name: 0, status: init, memory: 0, processing: 0>

[INFO][scheduler.py:5466] Starting worker compute stream, tcp://127.0.0.1:36471

[INFO][core.py:867] Starting established connection to tcp://127.0.0.1:50194

[INFO][scheduler.py:4213] Register worker <WorkerState 'tcp://127.0.0.1:46167', name: 7, status: init, memory: 0, processing: 0>

[INFO][scheduler.py:5466] Starting worker compute stream, tcp://127.0.0.1:46167

[INFO][core.py:867] Starting established connection to tcp://127.0.0.1:50186

[INFO][scheduler.py:4213] Register worker <WorkerState 'tcp://127.0.0.1:39117', name: 4, status: init, memory: 0, processing: 0>

[INFO][scheduler.py:5466] Starting worker compute stream, tcp://127.0.0.1:39117

[INFO][core.py:867] Starting established connection to tcp://127.0.0.1:50234

[INFO][scheduler.py:4213] Register worker <WorkerState 'tcp://127.0.0.1:40931', name: 5, status: init, memory: 0, processing: 0>

[INFO][scheduler.py:5466] Starting worker compute stream, tcp://127.0.0.1:40931

[INFO][core.py:867] Starting established connection to tcp://127.0.0.1:50226

[INFO][scheduler.py:4213] Register worker <WorkerState 'tcp://127.0.0.1:40565', name: 6, status: init, memory: 0, processing: 0>

[INFO][scheduler.py:5466] Starting worker compute stream, tcp://127.0.0.1:40565

[INFO][core.py:867] Starting established connection to tcp://127.0.0.1:50198

[INFO][scheduler.py:4213] Register worker <WorkerState 'tcp://127.0.0.1:36627', name: 2, status: init, memory: 0, processing: 0>

[INFO][scheduler.py:5466] Starting worker compute stream, tcp://127.0.0.1:36627

[INFO][core.py:867] Starting established connection to tcp://127.0.0.1:50212

[INFO][scheduler.py:5226] Receive client connection: Client-e3c83895-b904-11ed-8762-9b5ddbdbb7c8

[INFO][core.py:867] Starting established connection to tcp://127.0.0.1:50244

[INFO][abstract_initial_design.py:134] Using 5 initial design configurations and 0 additional configurations.

[INFO][successive_halving.py:163] Successive Halving uses budget type BUDGETS with eta 3, min budget 1, and max budget 25.

[INFO][successive_halving.py:307] Number of configs in stage:

[INFO][successive_halving.py:309] --- Bracket 0: [9, 3, 1]

[INFO][successive_halving.py:309] --- Bracket 1: [3, 1]

[INFO][successive_halving.py:309] --- Bracket 2: [1]

[INFO][successive_halving.py:311] Budgets in stage:

[INFO][successive_halving.py:313] --- Bracket 0: [2.7777777777777777, 8.333333333333332, 25.0]

[INFO][successive_halving.py:313] --- Bracket 1: [8.333333333333332, 25.0]

[INFO][successive_halving.py:313] --- Bracket 2: [25.0]

[INFO][smbo.py:298] Finished 0 trials.

[INFO][smbo.py:298] Finished 0 trials.

[INFO][smbo.py:298] Finished 0 trials.

[INFO][smbo.py:298] Finished 0 trials.

[INFO][smbo.py:298] Finished 0 trials.

[INFO][smbo.py:298] Finished 0 trials.

[INFO][smbo.py:298] Finished 0 trials.

[INFO][smbo.py:298] Finished 0 trials.

[INFO][abstract_intensifier.py:493] Added config c02611 as new incumbent because there are no incumbents yet.

[INFO][abstract_intensifier.py:565] Added config 704d44 and rejected config c02611 as incumbent because it is not better than the incumbents on 1 instances:

[INFO][configspace.py:175] --- activation: 'tanh' -> 'logistic'

[INFO][configspace.py:175] --- batch_size: 87 -> 31

[INFO][configspace.py:175] --- learning_rate_init: 0.0017420511057414775 -> 0.0038549874043245355

[INFO][configspace.py:175] --- n_neurons: 68 -> 87

[INFO][configspace.py:175] --- solver: 'sgd' -> 'adam'

[INFO][smbo.py:306] Configuration budget is exhausted:

[INFO][smbo.py:307] --- Remaining wallclock time: -6.066462278366089

[INFO][smbo.py:308] --- Remaining cpu time: inf

[INFO][smbo.py:309] --- Remaining trials: 437

Default cost (Hyperband): 0.36672856700711853

Incumbent cost (Hyperband): 0.0178087279480037
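Because the target function in this example returns `1 - mean accuracy`, the reported costs translate directly into cross-validated accuracy. The snippet below is a small sketch of that conversion using the values copied from the output above; the helper name `cost_to_accuracy` is illustrative and not part of SMAC.

```python
def cost_to_accuracy(cost: float) -> float:
    """Invert the `1 - accuracy` cost used by the target function."""
    return 1.0 - cost


# Values copied from the printed output of this example.
default_cost = 0.36672856700711853
incumbent_cost = 0.0178087279480037

print(f"Default accuracy:   {cost_to_accuracy(default_cost):.1%}")
print(f"Incumbent accuracy: {cost_to_accuracy(incumbent_cost):.1%}")
```

In this particular run, both intensifiers report the same incumbent cost, which corresponds to an accuracy of roughly 98.2% compared with roughly 63.3% for the default configuration.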

import warnings

import matplotlib.pyplot as plt
import numpy as np
from ConfigSpace import (
    Categorical,
    Configuration,
    ConfigurationSpace,
    EqualsCondition,
    Float,
    InCondition,
    Integer,
)
from sklearn.datasets import load_digits
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neural_network import MLPClassifier

from smac import MultiFidelityFacade as MFFacade
from smac import Scenario
from smac.facade import AbstractFacade
from smac.intensifier.hyperband import Hyperband
from smac.intensifier.successive_halving import SuccessiveHalving

__copyright__ = "Copyright 2021, AutoML.org Freiburg-Hannover"
__license__ = "3-clause BSD"


dataset = load_digits()

class MLP:
    @property
    def configspace(self) -> ConfigurationSpace:
        # Build Configuration Space which defines all parameters and their ranges.
        # To illustrate different parameter types, we use continuous, integer and categorical parameters.
        cs = ConfigurationSpace()

        n_layer = Integer("n_layer", (1, 5), default=1)
        n_neurons = Integer("n_neurons", (8, 256), log=True, default=10)
        activation = Categorical("activation", ["logistic", "tanh", "relu"], default="tanh")
        solver = Categorical("solver", ["lbfgs", "sgd", "adam"], default="adam")
        batch_size = Integer("batch_size", (30, 300), default=200)
        learning_rate = Categorical("learning_rate", ["constant", "invscaling", "adaptive"], default="constant")
        learning_rate_init = Float("learning_rate_init", (0.0001, 1.0), default=0.001, log=True)

        # Add all hyperparameters at once:
        cs.add_hyperparameters([n_layer, n_neurons, activation, solver, batch_size, learning_rate, learning_rate_init])

        # Adding conditions to restrict the hyperparameter space...
        # ... since learning rate is only used when solver is 'sgd'.
        use_lr = EqualsCondition(child=learning_rate, parent=solver, value="sgd")
        # ... since learning rate initialization will only be accounted for when using 'sgd' or 'adam'.
        use_lr_init = InCondition(child=learning_rate_init, parent=solver, values=["sgd", "adam"])
        # ... since batch size will not be considered when optimizer is 'lbfgs'.
        use_batch_size = InCondition(child=batch_size, parent=solver, values=["sgd", "adam"])

        # We can also add multiple conditions on hyperparameters at once:
        cs.add_conditions([use_lr, use_batch_size, use_lr_init])

        return cs

    def train(self, config: Configuration, seed: int = 0, budget: int = 25) -> float:
        # For deactivated parameters (by virtue of the conditions),
        # the configuration stores None-values.
        # This is not accepted by the MLP, so we replace them with placeholder values.
        lr = config["learning_rate"] if config["learning_rate"] else "constant"
        lr_init = config["learning_rate_init"] if config["learning_rate_init"] else 0.001
        batch_size = config["batch_size"] if config["batch_size"] else 200

        with warnings.catch_warnings():
            warnings.filterwarnings("ignore")

            classifier = MLPClassifier(
                hidden_layer_sizes=[config["n_neurons"]] * config["n_layer"],
                solver=config["solver"],
                batch_size=batch_size,
                activation=config["activation"],
                learning_rate=lr,
                learning_rate_init=lr_init,
                max_iter=int(np.ceil(budget)),
                random_state=seed,
            )

            # Returns the 5-fold cross validation accuracy
            cv = StratifiedKFold(n_splits=5, random_state=seed, shuffle=True)  # to make CV splits consistent
            score = cross_val_score(classifier, dataset.data, dataset.target, cv=cv, error_score="raise")

        return 1 - np.mean(score)

def plot_trajectory(facades: list[AbstractFacade]) -> None:
    """Plots the trajectory (incumbents) of the optimization process."""
    plt.figure()
    plt.title("Trajectory")
    plt.xlabel("Wallclock time [s]")
    plt.ylabel(facades[0].scenario.objectives)
    plt.ylim(0, 0.4)

    for facade in facades:
        X, Y = [], []
        for item in facade.intensifier.trajectory:
            # Single-objective optimization
            assert len(item.config_ids) == 1
            assert len(item.costs) == 1

            y = item.costs[0]
            x = item.walltime

            X.append(x)
            Y.append(y)

        plt.plot(X, Y, label=facade.intensifier.__class__.__name__)
        plt.scatter(X, Y, marker="x")

    plt.legend()
    plt.show()

if __name__ == "__main__":
    mlp = MLP()

    facades: list[AbstractFacade] = []
    for intensifier_object in [SuccessiveHalving, Hyperband]:
        # Define our environment variables
        scenario = Scenario(
            mlp.configspace,
            walltime_limit=60,  # After 60 seconds, we stop the hyperparameter optimization
            n_trials=500,  # Evaluate at most 500 trials
            min_budget=1,  # Train the MLP using a hyperparameter configuration for at least 1 epoch
            max_budget=25,  # Train the MLP using a hyperparameter configuration for at most 25 epochs
            n_workers=8,
        )

        # We want to run five random configurations before starting the optimization.
        initial_design = MFFacade.get_initial_design(scenario, n_configs=5)

        # Create our intensifier
        intensifier = intensifier_object(scenario, incumbent_selection="highest_budget")

        # Create our SMAC object and pass the scenario and the train method
        smac = MFFacade(
            scenario,
            mlp.train,
            initial_design=initial_design,
            intensifier=intensifier,
            overwrite=True,
        )

        # Let's optimize
        incumbent = smac.optimize()

        # Get cost of default configuration
        default_cost = smac.validate(mlp.configspace.get_default_configuration())
        print(f"Default cost ({intensifier.__class__.__name__}): {default_cost}")

        # Let's calculate the cost of the incumbent
        incumbent_cost = smac.validate(incumbent)
        print(f"Incumbent cost ({intensifier.__class__.__name__}): {incumbent_cost}")

        facades.append(smac)

    # Let's plot it
    plot_trajectory(facades)

Total running time of the script: (2 minutes 45.153 seconds)
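The budgets handed to the target function are generally fractional, and the `train` method rounds them up before passing them to `max_iter`. The sketch below illustrates that rounding for the three budgets that appear in the log output. It uses `math.ceil` instead of `np.ceil` only to stay dependency-free, and the helper name `epochs_for_budget` is hypothetical.

```python
import math


def epochs_for_budget(budget: float) -> int:
    """Round a fractional budget up to a whole number of epochs."""
    return int(math.ceil(budget))


# Budgets copied from the "Budgets in stage" log lines of this example.
logged_budgets = [2.7777777777777777, 8.333333333333332, 25.0]
epochs = [epochs_for_budget(b) for b in logged_budgets]
print(epochs)  # [3, 9, 25]
```

Under this rounding, the lowest budget actually used in the logged run corresponds to 3 epochs, even though `min_budget` is set to 1.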