Note

Click here to download the full example code

ParEGO¶

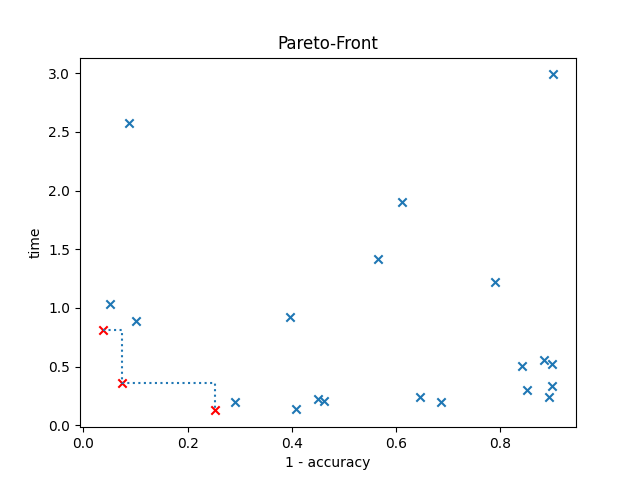

An example of how to use multi-objective optimization with ParEGO. Both accuracy and run-time are going to be optimized, and the configurations are shown in a plot, highlighting the best ones in a Pareto front. The red cross indicates the best configuration selected by SMAC.

In the optimization, SMAC evaluates the configurations on three different seeds. Therefore, the plot shows the mean accuracy and run-time of each configuration.

[WARNING][target_function_runner.py:71] The argument budget is not set by SMAC. Consider removing it.

[INFO][abstract_initial_design.py:133] Using 5 initial design and 0 additional configurations.

[INFO][intensifier.py:275] No incumbent provided in the first run. Sampling a new challenger...

[INFO][intensifier.py:446] First run and no incumbent provided. Challenger is assumed to be the incumbent.

[INFO][intensifier.py:566] Updated estimated cost of incumbent on 1 trials: 0.6158

[INFO][intensifier.py:566] Updated estimated cost of incumbent on 2 trials: 0.2876

[INFO][intensifier.py:566] Updated estimated cost of incumbent on 3 trials: 0.3437

[WARNING][abstract_runner.py:122] Target function returned infinity or nothing at all. Result is treated as CRASHED and cost is set to [inf, inf].

[WARNING][abstract_runner.py:128] Traceback: Traceback (most recent call last):

File "/opt/hostedtoolcache/Python/3.10.8/x64/lib/python3.10/site-packages/smac/runner/target_function_runner.py", line 158, in run

rval = self(config_copy, target_function, kwargs)

File "/opt/hostedtoolcache/Python/3.10.8/x64/lib/python3.10/site-packages/smac/runner/target_function_runner.py", line 231, in __call__

return algorithm(config, **algorithm_kwargs)

File "/home/runner/work/SMAC3/SMAC3/examples/3_multi_objective/2_parego.py", line 91, in train

score = cross_val_score(classifier, digits.data, digits.target, cv=cv, error_score="raise")

File "/opt/hostedtoolcache/Python/3.10.8/x64/lib/python3.10/site-packages/sklearn/model_selection/_validation.py", line 515, in cross_val_score

cv_results = cross_validate(

File "/opt/hostedtoolcache/Python/3.10.8/x64/lib/python3.10/site-packages/sklearn/model_selection/_validation.py", line 266, in cross_validate

results = parallel(

File "/opt/hostedtoolcache/Python/3.10.8/x64/lib/python3.10/site-packages/joblib/parallel.py", line 1088, in __call__

while self.dispatch_one_batch(iterator):

File "/opt/hostedtoolcache/Python/3.10.8/x64/lib/python3.10/site-packages/joblib/parallel.py", line 901, in dispatch_one_batch

self._dispatch(tasks)

File "/opt/hostedtoolcache/Python/3.10.8/x64/lib/python3.10/site-packages/joblib/parallel.py", line 819, in _dispatch

job = self._backend.apply_async(batch, callback=cb)

File "/opt/hostedtoolcache/Python/3.10.8/x64/lib/python3.10/site-packages/joblib/_parallel_backends.py", line 208, in apply_async

result = ImmediateResult(func)

File "/opt/hostedtoolcache/Python/3.10.8/x64/lib/python3.10/site-packages/joblib/_parallel_backends.py", line 597, in __init__

self.results = batch()

File "/opt/hostedtoolcache/Python/3.10.8/x64/lib/python3.10/site-packages/joblib/parallel.py", line 288, in __call__

return [func(*args, **kwargs)

File "/opt/hostedtoolcache/Python/3.10.8/x64/lib/python3.10/site-packages/joblib/parallel.py", line 288, in <listcomp>

return [func(*args, **kwargs)

File "/opt/hostedtoolcache/Python/3.10.8/x64/lib/python3.10/site-packages/sklearn/utils/fixes.py", line 117, in __call__

return self.function(*args, **kwargs)

File "/opt/hostedtoolcache/Python/3.10.8/x64/lib/python3.10/site-packages/sklearn/model_selection/_validation.py", line 686, in _fit_and_score

estimator.fit(X_train, y_train, **fit_params)

File "/opt/hostedtoolcache/Python/3.10.8/x64/lib/python3.10/site-packages/sklearn/neural_network/_multilayer_perceptron.py", line 762, in fit

return self._fit(X, y, incremental=False)

File "/opt/hostedtoolcache/Python/3.10.8/x64/lib/python3.10/site-packages/sklearn/neural_network/_multilayer_perceptron.py", line 448, in _fit

raise ValueError(

ValueError: Solver produced non-finite parameter weights. The input data may contain large values and need to be preprocessed.

[INFO][abstract_intensifier.py:340] Challenger (0.4045) is better than incumbent (0.4923) on 3 trials.

[INFO][abstract_intensifier.py:364] Changes in incumbent:

[INFO][abstract_intensifier.py:367] --- batch_size: None -> 175

[INFO][abstract_intensifier.py:367] --- learning_rate_init: None -> 0.004156370184967407

[INFO][abstract_intensifier.py:367] --- n_layer: 4 -> 2

[INFO][abstract_intensifier.py:367] --- n_neurons: 11 -> 90

[INFO][abstract_intensifier.py:367] --- solver: 'lbfgs' -> 'adam'

[INFO][abstract_intensifier.py:340] Challenger (0.1169) is better than incumbent (0.6466) on 3 trials.

[INFO][abstract_intensifier.py:364] Changes in incumbent:

[INFO][abstract_intensifier.py:367] --- activation: 'logistic' -> 'tanh'

[INFO][abstract_intensifier.py:367] --- batch_size: 175 -> 200

[INFO][abstract_intensifier.py:367] --- learning_rate_init: 0.004156370184967407 -> 0.001

[INFO][abstract_intensifier.py:367] --- n_layer: 2 -> 1

[INFO][abstract_intensifier.py:367] --- n_neurons: 90 -> 10

[INFO][abstract_intensifier.py:340] Challenger (0.2632) is better than incumbent (0.4307) on 3 trials.

[INFO][abstract_intensifier.py:364] Changes in incumbent:

[INFO][abstract_intensifier.py:367] --- n_neurons: 10 -> 13

[INFO][abstract_intensifier.py:367] --- solver: 'adam' -> 'lbfgs'

[INFO][abstract_intensifier.py:340] Challenger (0.1649) is better than incumbent (0.2812) on 3 trials.

[INFO][abstract_intensifier.py:364] Changes in incumbent:

[INFO][abstract_intensifier.py:367] --- activation: 'tanh' -> 'logistic'

[INFO][abstract_intensifier.py:367] --- n_neurons: 13 -> 15

[INFO][abstract_intensifier.py:340] Challenger (0.0832) is better than incumbent (0.1899) on 3 trials.

[INFO][abstract_intensifier.py:364] Changes in incumbent:

[INFO][abstract_intensifier.py:367] --- activation: 'logistic' -> 'tanh'

[INFO][abstract_intensifier.py:367] --- batch_size: None -> 268

[INFO][abstract_intensifier.py:367] --- learning_rate_init: None -> 0.0013420268771975475

[INFO][abstract_intensifier.py:367] --- n_layer: 1 -> 5

[INFO][abstract_intensifier.py:367] --- n_neurons: 15 -> 41

[INFO][abstract_intensifier.py:367] --- solver: 'lbfgs' -> 'adam'

[INFO][abstract_intensifier.py:340] Challenger (0.0415) is better than incumbent (0.0716) on 3 trials.

[INFO][abstract_intensifier.py:364] Changes in incumbent:

[INFO][abstract_intensifier.py:367] --- activation: 'tanh' -> 'relu'

[INFO][abstract_intensifier.py:367] --- batch_size: 268 -> 184

[INFO][abstract_intensifier.py:367] --- learning_rate: None -> 'constant'

[INFO][abstract_intensifier.py:367] --- learning_rate_init: 0.0013420268771975475 -> 0.010830691013456156

[INFO][abstract_intensifier.py:367] --- n_layer: 5 -> 2

[INFO][abstract_intensifier.py:367] --- n_neurons: 41 -> 31

[INFO][abstract_intensifier.py:367] --- solver: 'adam' -> 'sgd'

[INFO][base_smbo.py:260] Configuration budget is exhausted.

[INFO][abstract_facade.py:325] Final Incumbent: {'activation': 'relu', 'n_layer': 2, 'n_neurons': 31, 'solver': 'sgd', 'batch_size': 184, 'learning_rate': 'constant', 'learning_rate_init': 0.010830691013456156}

[INFO][abstract_facade.py:326] Estimated cost: 0.07413245521516294

Default costs: [0.62956618 0.23385032]

Validated costs from the Pareto front:

[0.0369122 0.76923291]

[0.27377592 0.15371815]

[0.07049211 0.33872724]

from __future__ import annotations

import time

import warnings

import matplotlib.pyplot as plt

import numpy as np

from ConfigSpace import (

Categorical,

Configuration,

ConfigurationSpace,

EqualsCondition,

Float,

InCondition,

Integer,

)

from sklearn.datasets import load_digits

from sklearn.model_selection import StratifiedKFold, cross_val_score

from sklearn.neural_network import MLPClassifier

from smac import HyperparameterOptimizationFacade as HPOFacade

from smac import Scenario

from smac.facade.abstract_facade import AbstractFacade

from smac.multi_objective.parego import ParEGO

__copyright__ = "Copyright 2021, AutoML.org Freiburg-Hannover"

__license__ = "3-clause BSD"

digits = load_digits()

class MLP:

@property

def configspace(self) -> ConfigurationSpace:

cs = ConfigurationSpace()

n_layer = Integer("n_layer", (1, 5), default=1)

n_neurons = Integer("n_neurons", (8, 256), log=True, default=10)

activation = Categorical("activation", ["logistic", "tanh", "relu"], default="tanh")

solver = Categorical("solver", ["lbfgs", "sgd", "adam"], default="adam")

batch_size = Integer("batch_size", (30, 300), default=200)

learning_rate = Categorical("learning_rate", ["constant", "invscaling", "adaptive"], default="constant")

learning_rate_init = Float("learning_rate_init", (0.0001, 1.0), default=0.001, log=True)

cs.add_hyperparameters([n_layer, n_neurons, activation, solver, batch_size, learning_rate, learning_rate_init])

use_lr = EqualsCondition(child=learning_rate, parent=solver, value="sgd")

use_lr_init = InCondition(child=learning_rate_init, parent=solver, values=["sgd", "adam"])

use_batch_size = InCondition(child=batch_size, parent=solver, values=["sgd", "adam"])

# We can also add multiple conditions on hyperparameters at once:

cs.add_conditions([use_lr, use_batch_size, use_lr_init])

return cs

def train(self, config: Configuration, seed: int = 0, budget: int = 10) -> dict[str, float]:

lr = config["learning_rate"] if config["learning_rate"] else "constant"

lr_init = config["learning_rate_init"] if config["learning_rate_init"] else 0.001

batch_size = config["batch_size"] if config["batch_size"] else 200

start_time = time.time()

with warnings.catch_warnings():

warnings.filterwarnings("ignore")

classifier = MLPClassifier(

hidden_layer_sizes=[config["n_neurons"]] * config["n_layer"],

solver=config["solver"],

batch_size=batch_size,

activation=config["activation"],

learning_rate=lr,

learning_rate_init=lr_init,

max_iter=int(np.ceil(budget)),

random_state=seed,

)

# Returns the 5-fold cross validation accuracy

cv = StratifiedKFold(n_splits=5, random_state=seed, shuffle=True) # to make CV splits consistent

score = cross_val_score(classifier, digits.data, digits.target, cv=cv, error_score="raise")

return {

"1 - accuracy": 1 - np.mean(score),

"time": time.time() - start_time,

}

def plot_pareto(smac: AbstractFacade) -> None:

"""Plots configurations from SMAC and highlights the best configurations in a Pareto front."""

# Get Pareto costs

_, c = smac.runhistory.get_pareto_front()

pareto_costs = np.array(c)

# Sort them a bit

pareto_costs = pareto_costs[pareto_costs[:, 0].argsort()]

# Get all other costs from runhistory

average_costs = []

for config in smac.runhistory.get_configs():

# Since we use multiple seeds, we have to average them to get only one cost value pair for each configuration

average_cost = smac.runhistory.average_cost(config)

if average_cost not in c:

average_costs += [average_cost]

# Let's work with a numpy array

costs = np.vstack(average_costs)

costs_x, costs_y = costs[:, 0], costs[:, 1]

pareto_costs_x, pareto_costs_y = pareto_costs[:, 0], pareto_costs[:, 1]

plt.scatter(costs_x, costs_y, marker="x")

plt.scatter(pareto_costs_x, pareto_costs_y, marker="x", c="r")

plt.step(

[pareto_costs_x[0]] + pareto_costs_x.tolist() + [np.max(costs_x)], # We add bounds

[np.max(costs_y)] + pareto_costs_y.tolist() + [np.min(pareto_costs_y)], # We add bounds

where="post",

linestyle=":",

)

plt.title("Pareto-Front")

plt.xlabel(smac.scenario.objectives[0])

plt.ylabel(smac.scenario.objectives[1])

plt.show()

if __name__ == "__main__":

mlp = MLP()

# Define our environment variables

scenario = Scenario(

mlp.configspace,

objectives=["1 - accuracy", "time"],

walltime_limit=40, # After 40 seconds, we stop the hyperparameter optimization

n_trials=200, # Evaluate max 200 different trials

n_workers=1,

)

# We want to run five random configurations before starting the optimization.

initial_design = HPOFacade.get_initial_design(scenario, n_configs=5)

multi_objective_algorithm = ParEGO(scenario)

# Create our SMAC object and pass the scenario and the train method

smac = HPOFacade(

scenario,

mlp.train,

initial_design=initial_design,

multi_objective_algorithm=multi_objective_algorithm,

overwrite=True,

)

# Let's optimize

# Keep in mind: The incumbent is ambiguous here because of ParEGO

smac.optimize()

# Get cost of default configuration

default_cost = smac.validate(mlp.configspace.get_default_configuration())

print(f"Default costs: {default_cost}\n")

print("Validated costs from the Pareto front:")

for i, config in enumerate(smac.runhistory.get_pareto_front()[0]):

cost = smac.validate(config)

print(cost)

# Let's plot a pareto front

plot_pareto(smac)

Total running time of the script: ( 0 minutes 45.044 seconds)